Sam Biddle reports in The Intercept:

Facebook can comb its user base of 2 billion and produce millions “at risk” of jumping from one brand to a competitor. These individuals could be targeted with advertising (to) pre-empt and change their decision, (which) Facebook calls “improved marketing efficiency.” Location, device, Wi-Fi network details, video usage, affinities, and friendships can be fed to run a simulation, then sold. The company describes this as “Facebook’s Machine Learning expertise” for “core business challenges.” Once they’ve made this prediction, they have a financial interest in making it true.

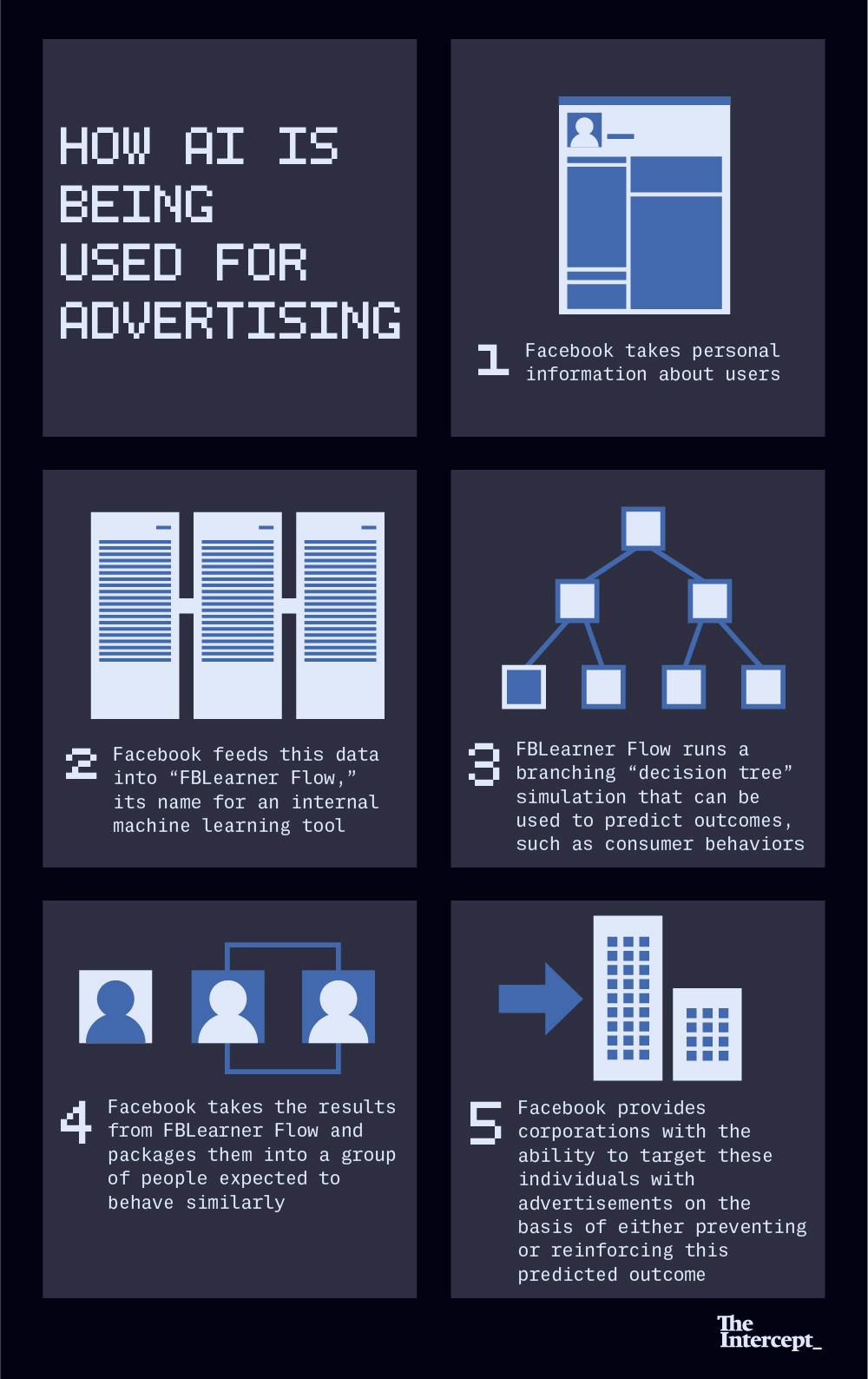

Since the Cambridge Analytica scandal erupted in March, Facebook has been attempting to make a moral stand for your privacy, distancing itself from the unscrupulous practices of the U.K. political consultancy. “Protecting people’s information is at the heart of everything we do,” wrote Paul Grewal, Facebook’s deputy general counsel, just a few weeks before founder and CEO Mark Zuckerberg hit Capitol Hill to make similar reassurances, telling lawmakers, “Across the board, we have a responsibility to not just build tools, but to make sure those tools are used for good.” But in reality, a confidential Facebook document reviewed by The Intercept shows that the two companies are far more similar than the social network would like you to believe.

The recent document, described as “confidential,” outlines a new advertising service that expands how the social network sells corporations’ access to its users and their lives: Instead of merely offering advertisers the ability to target people based on demographics and consumer preferences, Facebook offers the ability to target them based on how they will behave, what they will buy, and what they will think. These capabilities are the fruits of a self-improving, artificial intelligence-powered prediction engine, first unveiled by Facebook in 2016 and dubbed “FBLearner Flow.”

One slide in the document touts Facebook’s ability to “predict future behavior,” allowing companies to target people on the basis of decisions they haven’t even made yet. This would, potentially, give third parties the opportunity to alter a consumer’s anticipated course. Here, Facebook explains how it can comb through its entire user base of over 2 billion individuals and produce millions of people who are “at risk” of jumping ship from one brand to a competitor.
These individuals could then be targeted aggressively with advertising that could pre-empt and change their decision entirely, something Facebook calls “improved marketing efficiency.” This isn’t Facebook showing you Chevy ads because you’ve been reading about Ford all week, which is old hat in the online marketing world, but rather Facebook using facts of your life to predict that in the near future, you’re going to get sick of your car. Facebook’s name for this service: “loyalty prediction.”

Spiritually, Facebook’s artificial intelligence advertising has a lot in common with political consultancy Cambridge Analytica’s controversial “psychographic” profiling of voters, which uses mundane consumer demographics (what you’re interested in, where you live) to predict political action. But unlike Cambridge Analytica and its peers, who must content themselves with whatever data they can extract from Facebook’s public interfaces, Facebook is sitting on the motherlode, with unfettered access to staggering databases of behavior and preferences. A 2016 ProPublica report found some 29,000 different criteria for each individual Facebook user.

Zuckerberg has acted to distance his company from Cambridge Analytica, whose efforts on behalf of Donald Trump were fueled by Facebook data, telling reporters on a recent conference call that the social network is a careful guardian of information:

The vast majority of data that Facebook knows about you is because you chose to share it. Right? It’s not tracking. There are other internet companies or data brokers or folks that might try to track and sell data, but we don’t buy and sell. … For some reason, we haven’t been able to kick this notion for years that people think we will sell data to advertisers. We don’t. That’s not been a thing that we do. Actually it just goes counter to our own incentives.
Even if we wanted to do that, it just wouldn’t make sense to do that.

The Facebook document makes a similar gesture toward user protection, noting that all data is “aggregated and anonymized [to protect] user privacy,” meaning Facebook is not selling lists of users, but rather essentially renting out access to them. But these defenses play up a distinction without a difference: Regardless of who is mining the raw data Facebook sits on, the end result, which the company eagerly monetizes, is advertising insights that are very intimately about you, now packaged and augmented by the company’s marquee machine learning initiative. And although Zuckerberg and company are technically, narrowly correct when they claim that Facebook isn’t in the business of selling your data, what they’re really selling is far more valuable, the kind of 21st century insights only possible for a company with essentially unlimited resources. The reality is that Zuckerberg has far more in common with the likes of Equifax and Experian than any consumer-oriented company. Facebook is essentially a data wholesaler, period.

The document does not detail what information from Facebook’s user dossiers is included or excluded from the prediction engine, but it does mention drawing on location, device information, Wi-Fi network details, video usage, affinities, and details of friendships, including how similar a user is to their friends. All of this data can then be fed into FBLearner Flow, which will use it to essentially run a computer simulation of a facet of a user’s life, with the results sold to a corporate customer. The company describes this practice as “Facebook’s Machine Learning expertise” used for corporate “core business challenges.”

Experts consulted by The Intercept said the systems described in the document raise a variety of ethical issues, including how the technology might be used to manipulate users, influence elections, or strong-arm businesses.
Facebook has an “ethical obligation” to disclose how it uses artificial intelligence to monetize your data, said Tim Hwang, director of the Harvard-MIT Ethics and Governance of AI Initiative, even if Facebook has a practical incentive to keep the technology under wraps. “Letting people know that predictions can happen can itself influence the results,” Hwang said.

Facebook has been dogged by data controversies and privacy scandals of one form or another, to an almost comedic extent, throughout its 14-year history. Despite year after year of corporate apologies, nothing really changes. This may be why the company has thus far focused on the benign, friendly ways it uses artificial intelligence, or AI.

FBLearner Flow has been publicized as an internal software toolset that would help Facebook tune itself to your preferences every time you log in. “Many of the experiences and interactions people have on Facebook today are made possible with AI,” Facebook engineer Jeffrey Dunn wrote in an introductory blog post about FBLearner Flow.

Asked by Fortune’s Stacey Higginbotham where Facebook hoped its machine learning work would take it in five years, Chief Technology Officer Mike Schroepfer said in 2016 his goal was that AI “makes every moment you spend on the content and the people you want to spend it with.” Using this technology for advertising was left unmentioned. A 2017 TechCrunch article declared, “Machine intelligence is the future of monetization for Facebook,” but quoted Facebook executives in only the mushiest ways: “We want to understand whether you’re interested in a certain thing generally or always. Certain things people do cyclically or weekly or at a specific time, and it’s helpful to know how this ebbs and flows,” said Mark Rabkin, Facebook’s vice president of engineering for ads. The company was also vague about the melding of machine learning to ads in a 2017 Wired article about the company’s AI efforts, which alluded to efforts “to show more relevant ads” using machine learning and anticipate what ads consumers are most likely to click on, a well-established use of artificial intelligence.
Most recently, during his congressional testimony, Zuckerberg touted artificial intelligence as a tool for curbing hate speech and terrorism.

But based on the document, the AI-augmented service Facebook appears to be offering goes far beyond “understanding whether you’re interested in a certain thing generally or always.” To Frank Pasquale, a law professor at the University of Maryland and scholar at Yale’s Information Society Project who focuses on algorithmic ethics, this kind of behavioral-modeling marketing sounds like a machine learning “protection racket.” As he told The Intercept:

You can think of Facebook as having protective surveillance [over] how competitors are going to try to draw customers. … We can surveil for you and see when your rival is trying to pick off someone, [then] we’re going to swoop in and make sure they’re kept in your camp.

Artificial intelligence has more or less become as empty a tech buzzword as any other, but broadly construed, it includes technologies like “machine learning,” whereby computers essentially teach themselves to be increasingly effective at tasks as diverse as facial recognition and financial fraud detection. Presumably, FBLearner Flow is teaching itself to be more accurate every day.

Facebook is far from the only firm scrambling to turn the bleeding edge of AI research into a revenue stream, but it is uniquely situated. Even Google, with its stranglehold over search and email and similarly bottomless budgets, doesn’t have what Zuckerberg does: a list of 2 billion people and what they like, what they think, and who they know. Facebook can hire the best and brightest Ph.D.’s and dump an essentially infinite amount of money into computing power.

Facebook’s AI division has turned to several different machine learning techniques, among them “gradient boosted decision trees,” or GBDT, which, according to the document, is used for advertising purposes.
A 2017 article from the Proceedings of Machine Learning Research describes GBDT as “a powerful machine-learning technique that has a wide range of commercial and academic applications and produces state-of-the-art results for many challenging data mining problems.” This machine learning technique is increasingly popular in the data-mining industry.

Facebook’s keen interest in helping clients extract value from user data perhaps helps explain why the company did not condemn what Cambridge Analytica did with the data it extracted from the social network; instead, Facebook’s outrage has focused on Cambridge Analytica’s deception. With much credit due to Facebook’s communications team, the debate has been over Cambridge Analytica’s “improper access” to Facebook data, rather than why Cambridge Analytica wanted Facebook’s data to begin with. But once you take away the question of access and origin, Cambridge Analytica begins to resemble Facebook’s smaller, less ambitious sibling.

The ability to hit demographic bullseyes has landed Facebook in trouble many times before, in part because the company’s advertising systems were designed to be staggeringly automated. Journalists at ProPublica were recently able to target ads at dubious groups like self-identified “Jew haters.” Whether it’s a political campaign trying to spook a very certain type of undecided voter, or Russian spooks just trying to make a mess, Facebook’s ad tech has been repeatedly put to troubling ends, and it remains an open question whether any entity ought to have the ability to sell access to over 2 billion pairs of eyeballs around the world. Meanwhile, Facebook appears to be accelerating, rather than tempering, its runaway, black-box business model.
From a company that’s received ample criticism for relying on faceless algorithms to drive its business, the choice to embrace self-teaching artificial intelligence to aid in data mining will not come as a comforting one.

That Facebook is offering to sell its ability to predict your actions (and your loyalty) has new gravity in the wake of the 2016 election, in which Trump’s digital team used Facebook targeting to historic effect. Facebook works regularly with political campaigns around the world and boasts of its ability to influence turnout: a Facebook “success story” about its work with the Scottish National Party describes the collaboration as “triggering a landslide.” Since Zuckerberg infamously dismissed the claim that Facebook has the ability to influence elections, an ability Facebook itself has advertised, the company has been struggling to clean up its act. Until it reckons with its power to influence based on what it knows about people, should Facebook really be expanding into influencing people based on what it can predict about them?

Jonathan Albright, research director at Columbia University’s Tow Center for Digital Journalism, told The Intercept that like any algorithm, especially from Facebook, AI targeting “can always be weaponized.” Albright, who has become an outspoken critic of Facebook’s ability to channel political influence, worries how such techniques could be used around elections, by predicting which “people … might be dissuaded into not voting,” for example.

One section of the document shines the spotlight on Facebook’s successful work helping a client monetize a specific, unnamed racial group, although it’s unclear if this was achieved using FBLearner Flow or more conventional methods. Facebook removed the ability to target ethnic groups at the end of last year after a ProPublica report.

Pasquale, the law professor, told The Intercept that Facebook’s behavioral prediction work is “eerie” and worried how the company could turn algorithmic predictions into “self-fulfilling prophecies,” since “once they’ve made this prediction, they have a financial interest in making it true.” That is, once Facebook tells an advertising partner you’re going to do something or other next month, the onus is on Facebook to either make that event come to pass, or show that they were able to help effectively prevent it (how Facebook can verify to a marketer that it was indeed able to change the future is unclear).

The incentives created by AI are problematic enough when the technology is used toward a purchase, even more so if used toward a vote. Rumman Chowdhury, who leads Accenture’s Responsible AI initiative, underscored the fact that like Netflix’s or Amazon’s suggestions, more ambitious algorithmic predictions could not only guess a Facebook user’s behavior, but also reinforce it: “Recommendation engines are incentivized to give you links you will click on, not necessarily valuable information.”

Facebook did not respond to repeated questions about exactly what kinds of user data are used for behavioral predictions, or whether this technology could be used in more sensitive contexts like political campaigns or health care. Instead, Facebook’s PR team stated that the company uses “FBLearner Flow to manage many different types of workflows,” and that “machine learning is one type of workflow it can manage.” Facebook denied that FBLearner Flow is used for marketing applications (a “mischaracterization”) and said that it has “made it clear publicly that we use machine learning for ads,” pointing to the 2017 Wired article.

Problematic as well is Facebook’s reluctance to fully disclose how it monetizes AI.
Albright described this reluctance as a symptom of “the inherent conflict” between Facebook and “accountability,” as “they just can’t release most of the details on these things to the public because that is literally their business model.”

But Facebook has never been eager to disclose anything beyond what’s demanded by the Securities and Exchange Commission and crisis PR. The company has repeatedly proven that it is able to fudge the facts and then, when reality catches up, muddle its way out of it with mushy press statements and halfhearted posts from Zuckerberg.

And yet the number of people around the world using Facebook (and the company’s cash) continues to expand. One wouldn’t expect the same kind of image immunity Facebook has enjoyed (at least up until the Cambridge Analytica scandal) from, say, a fast-food corporation plagued by accusations of dangerous indifference toward customers. It’s possible that people don’t care enough about their own privacy to stay angry long enough to make Facebook change in any meaningful way.

Maybe enough Facebook users just take it as a given that they’ve made a pact with the Big Data Devil and expect their personal lives to be put through a machine learning advertisement wringer. Hwang noted that “we can’t forget the history of all this, which is that advertising as an industry, going back decades, has been about the question of behavior prediction … of individuals and groups. … This is in some ways the point of advertising.” But we also can’t expect users of Facebook or any other technology to be able to decide for themselves what’s worth protesting and what’s worth fearing when they are so deliberately kept in a state of near-total ignorance. Chipotle is forced, by law, to disclose exactly what it’s serving you and in what amounts.
Facebook is under no mandate to explain what exactly it does with your data beyond privacy policy vagaries that state only what Facebook reserves the right to do, maybe. We know Facebook has engaged in the same kind of amoral political boosting as Cambridge Analytica because they used to brag about it on their website. Now the boasts are gone, without explanation, and we’re in the dark once more.

So while the details of what’s done with your data remain largely a matter of trade secrecy to Facebook, we can consider the following: Mark Zuckerberg claims that his company has “a responsibility to protect your data, and if we can’t, then we don’t deserve to serve you.” Zuckerberg also runs a company that uses your data to train AI prediction models that will be used to target and extract money from you on the basis of what you’re going to do in the future. If it seems difficult to square those two facts, maybe that’s because it’s impossible.
