Data of whatever sort can be manipulated; the question is how to identify and address it. JL

Kris Holt reports in Engadget:

Researchers from New York University have found a way to produce fake fingerprints using artificial intelligence that could fool biometric scanners (or the human eye) into thinking they're the real deal. The DeepMasterPrints, as the researchers are calling them, replicated 23 percent of fingerprints in a system that supposedly has an error rate of one in a thousand. When the false match rate was one in a hundred, the DeepMasterPrints were able to mimic real prints 77 percent of the time.

These synthetic prints could be most effective in bypassing a system with many fingerprints stored on it (as opposed to your phone, which probably has a record of a couple of your own digits). An attacker might have more chance of success through trial and error, similar to the way in which hackers run brute force or dictionary attacks against passwords.

Since most scanners don't wrap around the shape of your finger, they only detect a partial print. That's why you have to raise and lower your finger, and move it around, when setting up Touch ID on iOS or fingerprint unlocks on Android -- you won't place your finger on a scanner in exactly the same way every time.

Much of the time, biometric systems don't merge partial prints together to create a full image of your fingerprint. Instead, they compare scans against the partial records. That increases the likelihood that a bad actor could match a part of your print with a computer-generated one.
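A rough back-of-the-envelope sketch shows why comparing a scan against several partial records raises the odds of a false match. It assumes, as a simplification not stated in the article, that every comparison is independent and falsely matches with the same probability (the false match rate). The function name and the template counts are illustrative only.

```python
def chance_of_false_match(false_match_rate: float, comparisons: int) -> float:
    """Probability that at least one of `comparisons` independent checks falsely matches."""
    return 1.0 - (1.0 - false_match_rate) ** comparisons


# Illustrative numbers only: a 1-in-1,000 false match rate per comparison,
# and hypothetical counts of stored partial records.
for templates in (1, 12, 100):
    p = chance_of_false_match(0.001, templates)
    print(f"{templates:>3} partial records -> {p:.2%} chance of a false match per attempt")
```

Under these assumptions, while the per-comparison rate is small, the chance of a false match grows roughly in proportion to the number of partial records a scan is compared against.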

DeepMasterPrints also take advantage of the fact that, while full fingerprints are unique, they often share attributes. So a synthetic fingerprint that includes many of these common features has more chance of working than one that's completely randomized.

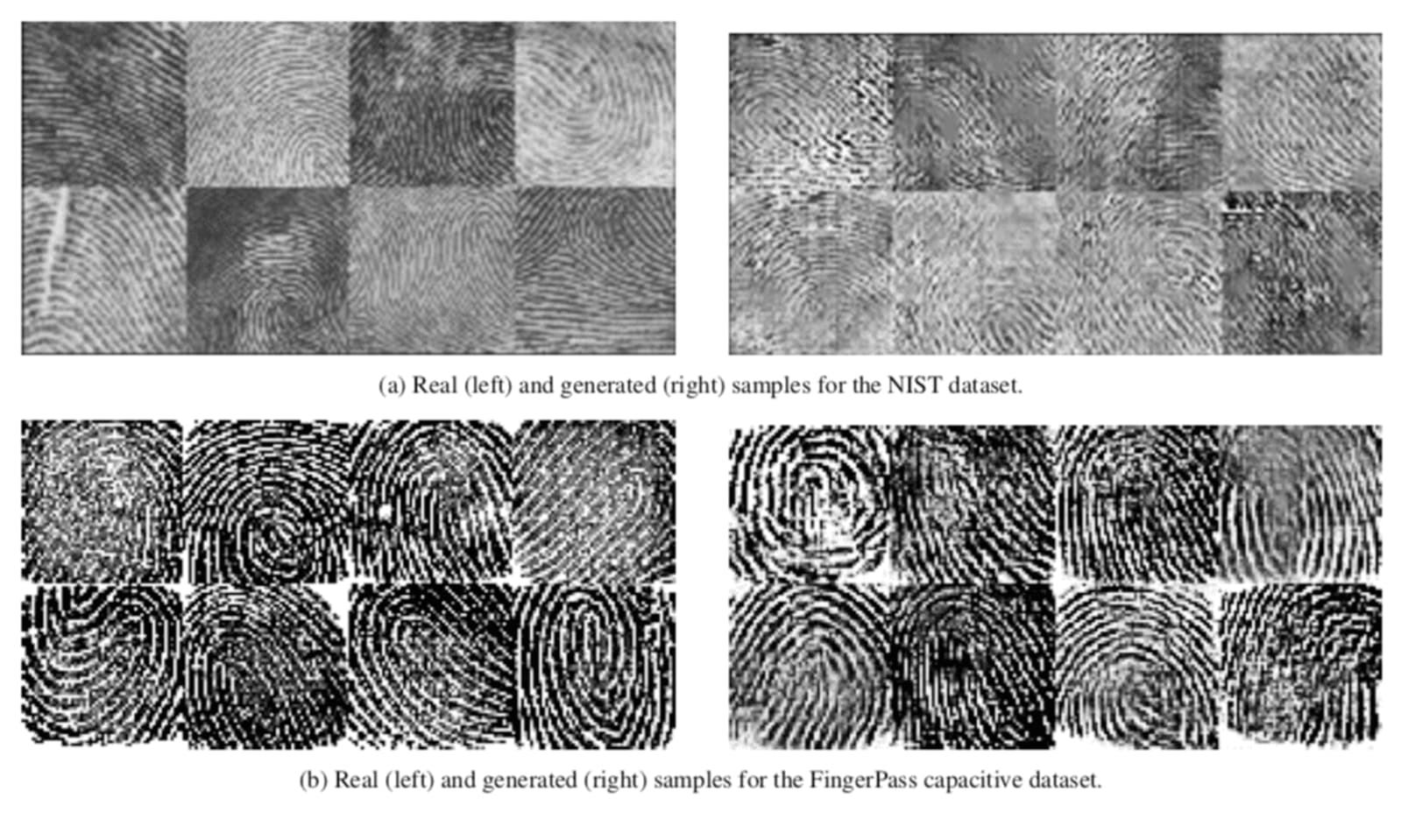

With those factors in mind, the researchers created a neural network that sought to create prints matching a range of partial fingerprints. They trained a generative adversarial network using a dataset of real prints.
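The idea of searching for a single synthetic print that matches many partial prints can be illustrated with a toy sketch. Everything here is a stand-in and not the researchers' method: `generate` is a hypothetical placeholder for a trained generator, `matches` is a made-up distance-threshold matcher, the "templates" are random vectors, and the search is a plain hill climb over the generator's input.

```python
import random

DIM = 8  # size of the toy latent vector and of each toy template (assumption)


def generate(latent):
    """Hypothetical stand-in for a trained generator: returns the latent unchanged."""
    return list(latent)


def matches(candidate, template, threshold=1.5):
    """Toy matcher: report a match when Euclidean distance is below a threshold."""
    dist = sum((c - t) ** 2 for c, t in zip(candidate, template)) ** 0.5
    return dist < threshold


def coverage(latent, templates):
    """Count how many stored templates the generated candidate would match."""
    candidate = generate(latent)
    return sum(matches(candidate, t) for t in templates)


def hill_climb(templates, steps=500, step_size=0.2, seed=0):
    """Keep a random perturbation of the latent only if coverage does not drop."""
    rng = random.Random(seed)
    best = [rng.gauss(0, 1) for _ in range(DIM)]
    best_score = coverage(best, templates)
    start_score = best_score
    for _ in range(steps):
        trial = [x + rng.gauss(0, step_size) for x in best]
        score = coverage(trial, templates)
        if score >= best_score:
            best, best_score = trial, score
    return best, start_score, best_score


rng = random.Random(1)
# Toy "partial print" templates clustered around shared values, echoing the
# point that real prints often share attributes.
templates = [[rng.gauss(0.5, 0.6) for _ in range(DIM)] for _ in range(200)]
latent, start, final = hill_climb(templates)
print(f"matched {start} templates at start, {final} after search (of {len(templates)})")
```

Because a perturbation is kept only when coverage does not decrease, the final count can never be lower than the starting one. A real attack would swap in an actual generator and a real fingerprint matcher.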

The DeepMasterPrints look convincingly like actual fingerprints, so they could fool humans too. A previous method, MasterPrints, turned out phony prints with spiky, angled edges that would immediately strike a human as fake, even if they could dupe scanners.

The researchers hope their work will prompt companies to make biometric systems more secure. "Without verifying that a biometric comes from a real person, a lot of these adversarial attacks become possible," Philip Bontrager, of NYU's engineering school, told Gizmodo. "The real hope of work like this is to push toward liveness detection in biometric sensors."
